sleepcycles

Define sleep and wake-up cycles for your Kubernetes resources. Automatically schedule the shutdown of Deployments, CronJobs, StatefulSets and HorizontalPodAutoscalers that occupy resources in your cluster, and wake them up only when you need them. That way you can:

- schedule resource-hungry workloads (migrations, synchronizations, replications) in hours that do not impact your daily business

- depressurize your cluster

- decrease your costs

- reduce your power consumption

- lower your carbon footprint

Getting Started

You’ll need a Kubernetes cluster to run against. You can use KIND or K3D to get a local cluster for testing, or run against a remote cluster.

[!CAUTION] Earliest compatible Kubernetes version is 1.25

Samples

Under config/samples you will find a set of manifests that you can use to test sleepcycles on your cluster:

SleepCycles

- core_v1alpha1_sleepcycle_app_x.yaml, manifests to deploy 2 SleepCycle resources in namespaces app-1 and app-2

apiVersion: core.rekuberate.io/v1alpha1
kind: SleepCycle
metadata:
  name: sleepcycle-app-1
  namespace: app-1
spec:
  shutdown: "1/2 * * * *"
  shutdownTimeZone: "Europe/Athens"
  wakeup: "*/2 * * * *"
  wakeupTimeZone: "Europe/Dublin"
  enabled: true

[!NOTE] The cron expressions of the samples are tailored so that you can run a quick demo. The shutdown expression schedules the deployment to scale down on odd minutes and the wakeup expression schedules it to scale up on even minutes.

Every SleepCycle has the following mandatory properties:

- shutdown: cron expression for your shutdown schedule
- enabled: whether this sleepcycle policy is enabled

and the following non-mandatory properties:

- shutdownTimeZone: the timezone for your shutdown schedule, defaults to UTC
- wakeup: cron expression for your wake-up schedule
- wakeupTimeZone: the timezone for your wake-up schedule, defaults to UTC
- successfulJobsHistoryLimit: how many completed CronJob Runner Pods to retain for debugging reasons, defaults to 1
- failedJobsHistoryLimit: how many failed CronJob Runner Pods to retain for debugging reasons, defaults to 1
- runnerImage: the image to use when spawning CronJob Runner pods, defaults to akyriako78/rekuberate-io-sleepcycles-runners

[!IMPORTANT] DO NOT ADD seconds or timezone information to your cron expressions.
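For a schedule closer to everyday use than the demo expressions, a SleepCycle along the following lines could put workloads to sleep on weekday evenings and wake them up on weekday mornings. This is only a sketch: the resource name, the schedules and the time zone are illustrative assumptions, while the property names are the ones described above. Both expressions use the five standard cron fields, with no seconds and no embedded time zone.

```yaml
# Hypothetical SleepCycle: sleep on weekday evenings, wake up on weekday mornings.
apiVersion: core.rekuberate.io/v1alpha1
kind: SleepCycle
metadata:
  name: sleepcycle-office-hours   # assumed name, pick your own
  namespace: app-1
spec:
  shutdown: "0 20 * * 1-5"        # 20:00, Monday to Friday
  shutdownTimeZone: "Europe/Athens"
  wakeup: "0 7 * * 1-5"           # 07:00, Monday to Friday
  wakeupTimeZone: "Europe/Athens"
  successfulJobsHistoryLimit: 1   # optional, shown with its documented default
  failedJobsHistoryLimit: 1       # optional, shown with its documented default
  enabled: true
```

Leaving out wakeup and wakeupTimeZone should give a shutdown-only policy, since both are listed as non-mandatory.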

Demo workloads

- whoami-app-1_x-deployment.yaml, manifests to deploy 2 Deployments that provision traefik/whoami in namespace app-1
- whoami-app-2_x-deployment.yaml, manifests to deploy a Deployment that provisions traefik/whoami in namespace app-2

SleepCycle is a namespace-scoped custom resource; the controller monitors all the resources in that namespace that are marked with a label whose key is rekuberate.io/sleepcycle and whose value is the name of the SleepCycle manifest you created:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: app-2
  namespace: app-2
  labels:
    app: app-2
    rekuberate.io/sleepcycle: sleepcycle-app-2
spec:
  replicas: 9
  selector:
    matchLabels:
      app: app-2
  template:
    metadata:
      name: app-2
      labels:
        app: app-2
    spec:
      containers:
        - name: app-2
          image: traefik/whoami
          imagePullPolicy: IfNotPresent

[!IMPORTANT] Any workload in namespace kube-system marked with rekuberate.io/sleepcycle will be ignored by the controller by design.
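The same label should opt in the other supported kinds. As a sketch, a StatefulSet attached to the sleepcycle-app-2 policy might look as follows; the StatefulSet name, the replica count and the headless Service it refers to are assumptions made for illustration and are not part of the samples.

```yaml
# Hypothetical StatefulSet opted in to the sleepcycle-app-2 policy via the label.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: app-2-stateful             # assumed name
  namespace: app-2                 # same namespace as the SleepCycle
  labels:
    app: app-2-stateful
    rekuberate.io/sleepcycle: sleepcycle-app-2
spec:
  serviceName: app-2-stateful      # assumed headless Service, not shown here
  replicas: 3
  selector:
    matchLabels:
      app: app-2-stateful
  template:
    metadata:
      labels:
        app: app-2-stateful
    spec:
      containers:
        - name: whoami
          image: traefik/whoami
          imagePullPolicy: IfNotPresent
```

As in the Deployment sample, the label sits on the workload's own metadata rather than on the pod template.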

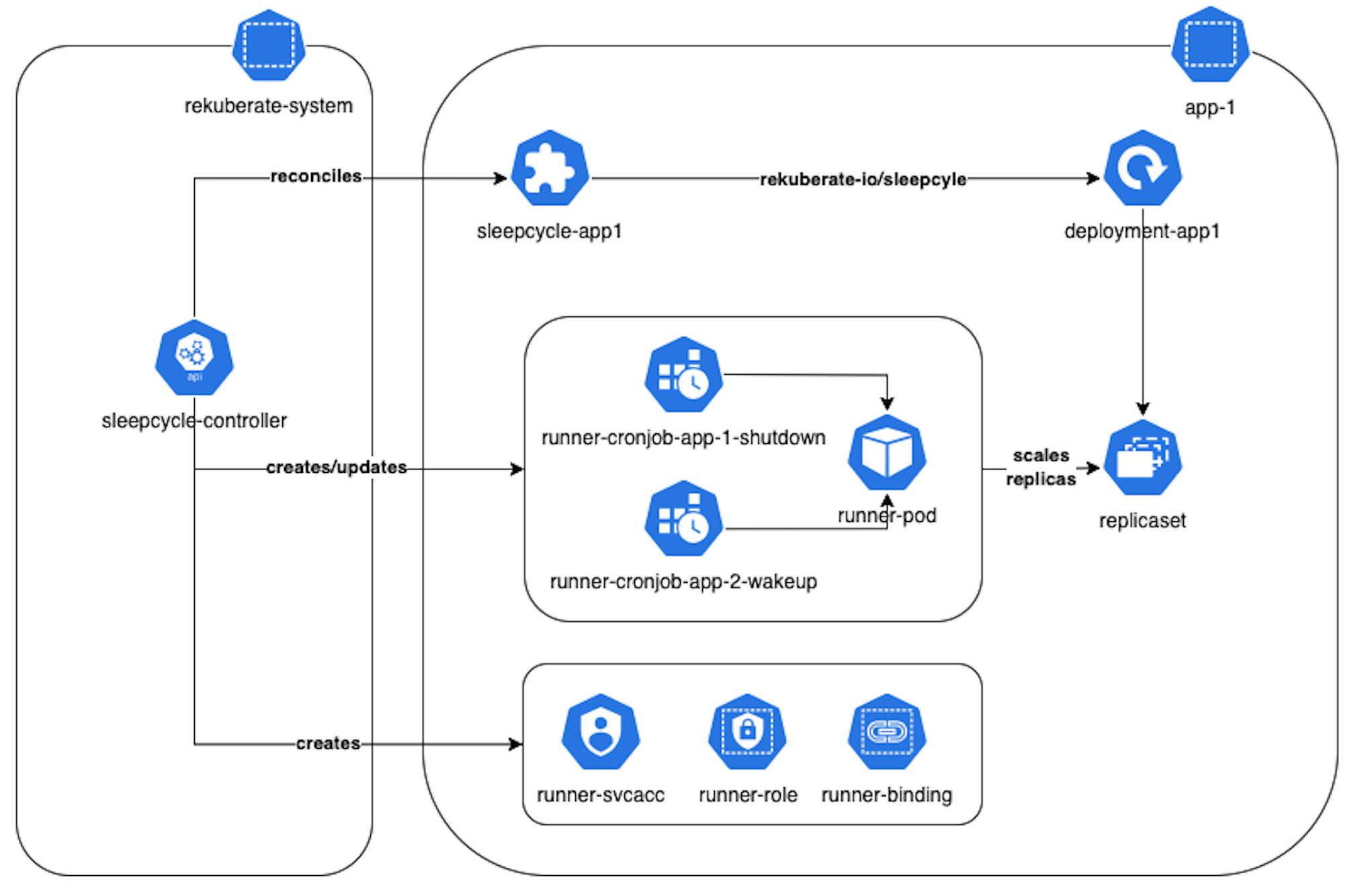

How it works

The diagram describes how rekuberate.io/sleepcycles schedules a Deployment:

- The sleepcycle-controller watches periodically, every 1min, all the SleepCycle custom resources for changes (in all namespaces).
- The controller, for every SleepCycle resource within the namespace app-1, collects all the resources that have been marked with the label rekuberate.io/sleepcycle: sleepcycle-app1.
- It provisions, for every workload (in this case the deployment deployment-app1), a CronJob for the shutdown schedule and optionally a second CronJob if a wake-up schedule is provided.
- It provisions a ServiceAccount, a Role and a RoleBinding per namespace, so that the runner pods are allowed to update the resources' specs.
- The Runner pods are created automatically by the cron jobs and are responsible for scaling the resources up or down.

[!NOTE] The diagram depicts how rekuberate.io/sleepcycles scales a Deployment. The same steps apply to a StatefulSet and a HorizontalPodAutoscaler. There are two exceptions though:

- a HorizontalPodAutoscaler will scale down to 1 replica and not to 0 as for a Deployment or a StatefulSet.
- a CronJob has no replicas to scale up or down; it is going to be enabled or suspended respectively.
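To make the two exceptions concrete, the manifests below sketch a CronJob and a HorizontalPodAutoscaler marked for the sleepcycle-app-1 policy. All names, images, schedules and thresholds are hypothetical. Which fields the runner actually changes on each kind is not stated here, so the comments only repeat the behaviour described in the note.

```yaml
# Hypothetical CronJob: per the note, suspended on shutdown and enabled again on wake-up.
apiVersion: batch/v1
kind: CronJob
metadata:
  name: periodic-report            # assumed name
  namespace: app-1
  labels:
    rekuberate.io/sleepcycle: sleepcycle-app-1
spec:
  schedule: "*/15 * * * *"
  jobTemplate:
    spec:
      template:
        spec:
          restartPolicy: OnFailure
          containers:
            - name: report
              image: busybox
              command: ["sh", "-c", "date"]
---
# Hypothetical HorizontalPodAutoscaler: per the note, scaled down to 1 replica, not 0.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: app-1-hpa                  # assumed name
  namespace: app-1
  labels:
    rekuberate.io/sleepcycle: sleepcycle-app-1
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: app-1                    # assumed target Deployment
  minReplicas: 2
  maxReplicas: 6
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
```

Whether the Deployment targeted by the autoscaler should carry the label as well is not covered here and is worth checking on a test cluster first.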

Deploy

From sources

- Build and push your image to the location specified by IMG in Makefile:

# Image URL to use all building/pushing image targets

IMG_TAG ?= $(shell git rev-parse --short HEAD)

IMG_NAME ?= rekuberate-io-sleepcycles

DOCKER_HUB_NAME ?= $(shell docker info | sed '/Username:/!d;s/.* //')

IMG ?= $(DOCKER_HUB_NAME)/$(IMG_NAME):$(IMG_TAG)

RUNNERS_IMG_NAME ?= rekuberate-io-sleepcycles-runners

KO_DOCKER_REPO ?= $(DOCKER_HUB_NAME)/$(RUNNERS_IMG_NAME)

make docker-build docker-push

- Deploy the controller to the cluster using the image defined in IMG:

make deploy

and then deploy the samples:

kubectl create namespace app-1

kubectl create namespace app-2

kubectl apply -f config/samples

Uninstall

make undeploy

Using Helm (from sources)

If you are on a development environment, you can quickly test and deploy the controller to the cluster using a Helm chart directly from config/helm:

helm install rekuberate-io-sleepcycles config/helm/ -n <namespace> --create-namespace

and then deploy the samples:

kubectl create namespace app-1

kubectl create namespace app-2

kubectl apply -f config/samples

Uninstall

helm uninstall rekuberate-io-sleepcycles -n <namespace>

Using Helm (from repo)

If, on the other hand, you are deploying to a production environment, it is highly recommended to deploy the controller to the cluster using a Helm chart from its repo:

helm repo add sleepcycles https://rekuberate-io.github.io/sleepcycles/

helm repo update

helm upgrade --install sleepcycles sleepcycles/sleepcycles -n rekuberate-system --create-namespace

and then deploy the samples:

kubectl create namespace app-1

kubectl create namespace app-2

kubectl apply -f config/samples

Uninstall

helm uninstall sleepcycles -n rekuberate-system

Develop

This project aims to follow the Kubernetes Operator pattern. It uses Controllers, which provide a reconcile function responsible for synchronizing resources until the desired state is reached on the cluster.

Controller

Modifying the API definitions

If you are editing the API definitions, generate the manifests, such as CRs or CRDs, using:

make generate

make manifests

then install the CRDs in the cluster with:

make install

[!TIP] You can debug the controller in the IDE of your choice by hooking to main.go, or you can start the controller without debugging with:

make run

[!TIP] Run make --help for more information on all potential make targets. More information can be found via the Kubebuilder Documentation.

Build

You always need to build a new Docker image and push it to your repository:

make docker-build docker-push

[!IMPORTANT] In this case you will need to adjust your Helm chart values to use your repository and container image.

Runner

Build

make ko-build-runner

[!IMPORTANT] In this case you will need to adjust the runnerImage of your SleepCycle manifest to use your own Runner image.
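As a sketch of that adjustment, the sample SleepCycle could point at a self-built Runner image as shown below. The registry path is a placeholder, not a real image, and whether a tag has to be part of the reference is not specified here, so use whatever reference your build actually pushed.

```yaml
apiVersion: core.rekuberate.io/v1alpha1
kind: SleepCycle
metadata:
  name: sleepcycle-app-1
  namespace: app-1
spec:
  shutdown: "1/2 * * * *"
  wakeup: "*/2 * * * *"
  enabled: true
  # Placeholder: replace with the image reference you built and pushed.
  runnerImage: registry.example.com/my-team/rekuberate-io-sleepcycles-runners
```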

Uninstall CRDs

To delete the CRDs from the cluster:

make uninstall